Autonomous Pathfinding Robot (RBE 3002)

RBE 3002 - Unified Robotics IV

Introduction

Course objectives: Fourth of a four-course sequence introducing foundational theory and practice of robotics engineering from the fields of computer science, electrical engineering and mechanical engineering. The focus of this course is navigation, position estimation and communications. Concepts of dead reckoning, landmark updates, inertial sensors, and radio location will be explored. Control systems as applied to navigation will be presented. Communication, remote control and remote sensing for mobile robots and tele-robotic systems will be introduced. Wireless communications including wireless networks and typical local and wide area networking protocols will be discussed. Considerations will be discussed regarding operation in difficult environments such as underwater, aerospace, hazardous, etc. Laboratory sessions will be directed towards the solution of an open-ended problem over the course of the entire term.

Project objectives: Using the TurtleBot3 robotics platform, explore and map an unknown environment. The robot should be able to localize itself in the world, drive around the environment without hitting obstacles, expand areas of interest on the map, know when the map is complete, and find an optimal path to an arbitrary point within the world. The test environment was set up on a large table, with solid boxes forming the walls of a randomly created maze.

The project was split into three phases:

- Phase 1: Create a map of the environment.

  - Calculate C-Space.

  - Find a frontier to explore.

  - Plan an optimal path to the frontier.

  - Navigate to the frontier and avoid obstacles.

  - Repeat until a complete map is created.

  - Save the map.

- Phase 2: Using the map, navigate back from the current position to the start position while avoiding obstacles.

- Phase 3: Navigate to a specific goal position within the maze. This position is chosen at random by the professor.
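The C-Space and frontier steps of the mapping phase can be illustrated with a minimal sketch. This is not the project's actual code: the function names `calc_cspace` and `find_frontier_cells`, the plain list-of-lists grid, and the square (rather than circular) padding are all assumptions made for illustration. The cell values follow the convention of ROS occupancy grids, where -1 is unknown, 0 is free, and 100 is occupied.

```python
from typing import List, Tuple

Grid = List[List[int]]
UNKNOWN, FREE, OCCUPIED = -1, 0, 100  # occupancy grid cell values


def calc_cspace(grid: Grid, padding: int) -> Grid:
    """Return a copy of the grid with every obstacle grown by `padding` cells.

    Hypothetical helper: growing the walls lets the planner treat the robot
    as a single point. A square neighborhood is used here for simplicity.
    """
    rows, cols = len(grid), len(grid[0])
    cspace = [row[:] for row in grid]
    for r in range(rows):
        for c in range(cols):
            if grid[r][c] != OCCUPIED:
                continue
            # Mark every cell within `padding` of this obstacle as occupied.
            for nr in range(max(0, r - padding), min(rows, r + padding + 1)):
                for nc in range(max(0, c - padding), min(cols, c + padding + 1)):
                    cspace[nr][nc] = OCCUPIED
    return cspace


def find_frontier_cells(grid: Grid) -> List[Tuple[int, int]]:
    """Return free cells that touch at least one unknown cell (4-connected)."""
    rows, cols = len(grid), len(grid[0])
    frontier = []
    for r in range(rows):
        for c in range(cols):
            if grid[r][c] != FREE:
                continue
            for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                nr, nc = r + dr, c + dc
                if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == UNKNOWN:
                    frontier.append((r, c))
                    break  # one unknown neighbor is enough
    return frontier


if __name__ == "__main__":
    demo = [
        [0, 0, 0, -1],
        [0, 100, 0, -1],
        [0, 0, 0, 0],
    ]
    print(find_frontier_cells(demo))
    print(find_frontier_cells(calc_cspace(demo, 1)))
```

In this sketch, exploration would be considered complete once `find_frontier_cells` returns an empty list for the padded map, which is one plausible way to decide "the map is complete".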

Programming

This robot was programmed using the Robot Operating System (ROS), an open-source framework (strictly speaking, a middleware) that supports robotics research and development. Its flexibility and ease of use make it a leading solution in the robotics industry, with companies such as iRobot and ABB adopting some form of ROS. At its core, it enables communication between submodules, or nodes, in the code base. In this application, ROS runs both locally (aboard a Raspberry Pi on the robot) and “server-side” aboard a PC. The PC side and the robot side of ROS communicate over Wi-Fi.

The TurtleBot3 platform features a 360-degree LiDAR, a 6-axis inertial measurement unit (IMU), precision Dynamixel drive servos, and powerful onboard computation. With these features and ROS, we effectively have a robot that can accomplish the project objectives of mapping and navigating a maze.

The navigation stack that ROS provides lays the framework for mapping (gmapping), localization (AMCL), and navigation (move_base). Our ROS implementation for this autonomous robot was structured around nine core nodes, with eight topics and three services allowing communication between them. One main node manages the implementation of the ROS navigation stack and acts as a task scheduler (finite state machine) for the different phases of the project. For optimal path planning, we implemented a version of the A* algorithm using Euclidean distance between map nodes as the primary heuristic.
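To make the path-planning idea concrete, here is a minimal sketch of A* on a grid with a Euclidean heuristic. It is not the project's implementation: the function names, the 8-connected neighborhood, and the rule that any nonzero cell is non-traversable are assumptions chosen for illustration. Diagonal moves are allowed to pass the corner of an obstacle, which is tolerable only if the grid has already been padded into C-Space.

```python
import heapq
import math
from typing import Dict, List, Optional, Tuple

Cell = Tuple[int, int]


def euclidean(a: Cell, b: Cell) -> float:
    """Straight-line distance between two grid cells."""
    return math.hypot(a[0] - b[0], a[1] - b[1])


def a_star(grid: List[List[int]], start: Cell, goal: Cell) -> Optional[List[Cell]]:
    """Return the cheapest path from start to goal, or None if unreachable.

    Assumption: a cell is traversable only when its value is 0.
    """
    rows, cols = len(grid), len(grid[0])
    open_heap = [(euclidean(start, goal), start)]  # entries are (f = g + h, cell)
    came_from: Dict[Cell, Cell] = {}
    g_cost: Dict[Cell, float] = {start: 0.0}
    closed = set()

    while open_heap:
        _, current = heapq.heappop(open_heap)
        if current == goal:
            # Walk the parent links back to the start.
            path = [current]
            while current in came_from:
                current = came_from[current]
                path.append(current)
            return path[::-1]
        if current in closed:
            continue  # stale heap entry
        closed.add(current)

        r, c = current
        for dr in (-1, 0, 1):
            for dc in (-1, 0, 1):
                if dr == 0 and dc == 0:
                    continue
                nxt = (r + dr, c + dc)
                if not (0 <= nxt[0] < rows and 0 <= nxt[1] < cols):
                    continue
                if grid[nxt[0]][nxt[1]] != 0:
                    continue
                # Step cost is 1 for straight moves and sqrt(2) for diagonals.
                tentative = g_cost[current] + euclidean(current, nxt)
                if tentative < g_cost.get(nxt, math.inf):
                    g_cost[nxt] = tentative
                    came_from[nxt] = current
                    heapq.heappush(open_heap, (tentative + euclidean(nxt, goal), nxt))
    return None


if __name__ == "__main__":
    maze = [
        [0, 0, 0],
        [100, 100, 0],
        [0, 0, 0],
    ]
    print(a_star(maze, (0, 0), (2, 0)))
```

Because each step costs exactly the Euclidean distance it covers, the Euclidean heuristic never overestimates the remaining cost, so the first time the goal is popped from the heap the path found is optimal for this grid model.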

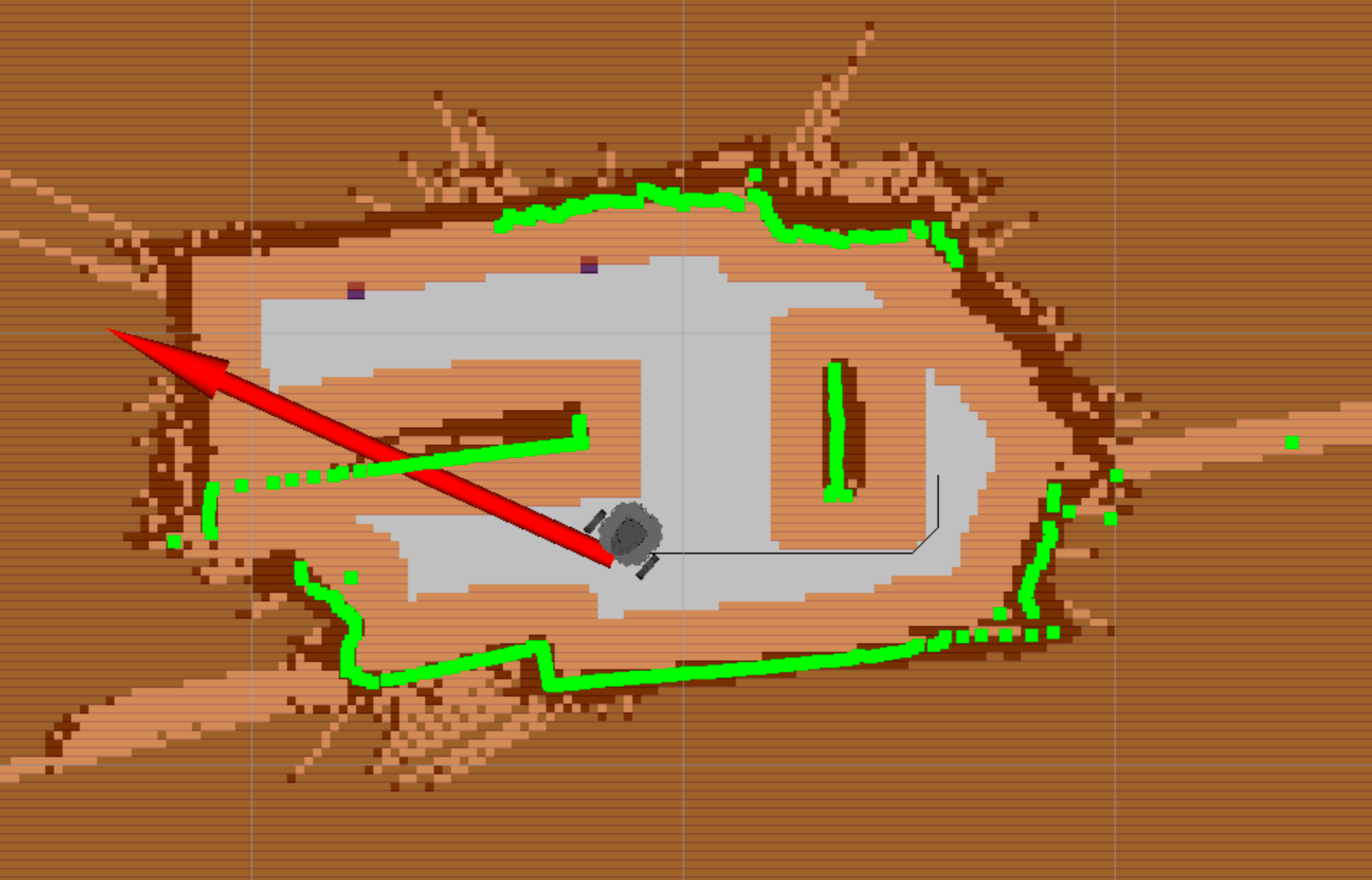

The subcomponents of our code were developed incrementally throughout the seven-week term, and the biggest challenge was the full integration of all our components. Below is an RViz capture of the map our program generated during a real-world test.

See the full code on GitHub.

I’ve already created a write-up of the project, where you can find more details about the code and math developed in this project. Below is also an embedded version of the final report.

Conclusion

This project turned out to be a great success and was one of my favorite projects in my robotics curriculum. In fact, my team and I achieved the fastest time among several teams on the Phase 3 runs. I really enjoyed this project because it felt very “rooted” in the real world, with a suite of advanced sensors and software packages similar to those used to develop autonomous robots and vehicles in industry. While gmapping and AMCL did hide much of the SLAM process in a “black box”, implementing intelligent pathfinding on top of their data was the main takeaway from this project. Filtering out noisy data and navigating while localization shifted the robot's estimated position were the real challenges to overcome.